Dockercon 2016 – Seattle: a personal report

A few weeks ago I shared on my blog a cleaned up conference report from Serverlessconf 2016.

Given the relatively good success of the experiment (some good feedback at “no cost” for me – copy and paste FTW!) I decided to take another shot.

This time I am sharing a report from Dockercon 2016 (in Seattle). This has been similarly polished by deleting comments that were not supposed to be “public”. You will see those comments being replaced by

As always, these reports are supposed to be very informal and surely include some very rough notes. You have been warned.

What you will read below this line is the beginning of my original report (and informal notes).

_________________________

Executive Summary

These are, in summary, my core takeaways from the conference.

It’s all about Ops (and not just about microservices)

So far, everything “Docker” has done has primarily catered to a developer audience.

But there were two very high level and emerging trends at Dockercon 2016.

- Trend #1: running containers in production

This was a very pervasive message from both Docker Inc. and all ecosystem vendors. It’s almost as if they realized that to monetize their technology they (also) need to start talking to Ops (on top of evangelizing Devs).

- Trend #2: how to containerize legacy applications

This was another pervasive trend at the show. The cynic in me is suggesting that this is also due to a monetization strategy. It feels like there are so many fish (vendors/startups) in such a (still) little pond (microservices) that, if they can turn the pond into a sea, they can all swim and prosper better.

Is it me or was Docker Cloud missing in action?

Completely unrelated, but at least as interesting, is that Docker Cloud had very little coverage during the event. Docker acquired Tutum a while back and a few months ago they renamed their offering to Docker Cloud. Docker Cloud is supposed to be (at least according to the message given at Dockercon 2015 in Barcelona) the public cloud version (managed by Docker Inc.) of a Docker Datacenter instantiation on-prem (managed by the customer). Last year I pointed out that the two stacks (Docker Cloud and Docker Datacenter) were so vastly different that making them converge into a single stack would have been a daunting exercise. This year apparently Docker chose to not talk about it: it was not featured prominently in keynotes and did not have a dedicated breakout session (unless I missed it?). It is as if Docker decided to step back from it and focus on being a pure software company instead of being a cloud services provider (i.e. “if you want a Docker stack in the public cloud go get the bits and instantiate them on AWS or Azure”).

Technology focus (and then lack thereof)

Another interesting aspect of Dockercon 2016 is that a lot of technology focus has been put into the OSS version of the bits (i.e. Docker Engine 1.12) during the day 1 keynote. The technology focus on Docker Datacenter (the closed source “pay for” bits) during the day 2 keynote was not that big of a splash. They focused instead more on getting customers on stage and more on educating on the journey to production rather than announcing 20 new features of Docker UCP. Whether that is because Docker Inc. did not have 20 new features in Docker UCP or because they thought the approach they took was a better one, I don’t know.

Bare metal, Containers, VMs, etc

The architectural confusion re the role of bare metal, VMs, and containers reigns supreme. There is so much preconceived love, hate or other bias about which role each of these technologies plays that, many times, it is hard to have a meaningful and genuine architectural discussion without politics and bias getting in the way. All in all, the jury is still out re how this will pan out. Recurring patterns will only start to emerge in the next few years.

Serverless

During the closing keynote on Tuesday there were a number of interesting demos, one of which was about how to use Docker for Serverless patterns. What was interesting to me was not so much about the technical details but how Docker felt the need to rush and get back the crown of “this is the coolest thing around”. Admittedly the “Serverless movement” has started to steal some momentum from Docker lately by burying (Docker) containers under a set of brand new consumption patterns that would NOT expose a native Docker experience. I’d speculate that Docker felt a little bit frightened by this and is starting to climb back up to remain at the “top of the stack”. The Serverless community made an interesting counter argument.

Docker and the competition

Docker is closing some of the gaps they had compared to other container management players and projects (Kubernetes, Rancher, Mesos, etc). Docker has a huge asset in Docker Engine OSS and with the new 1.12 release they have made a lot of good improvements. I am only puzzled that they keep adding juice to the open source (and free) bits (rather than to UCP), thus posing a potential risk to their overall monetization strategy. But then again, when you fight against the likes of Rancher and Kubernetes that make available (so far) all they do “for free”, it’s hard to take a different approach. On a different note, the anti-PaaS attitude remains strong: they keep pointing out that the PaaS approach is too strict, too opinionated and doesn’t leave developers the freedom to make the choices they may want to make.

Interesting times ahead for sure.

Docker and VMware

Expo

The floor was well attended and the (main) messages seemed to be aligned with those of Docker itself. The gut feeling is that there was slightly less developer love and more Ops love (i.e. running containers in production and containerizing traditional applications).

Monday Keynote (rough notes)

Dockercon SF 2014 had 500 attendees, Dockercon SF 2015 had 2000 attendees, Dockercon Seattle 2016 had 4000 attendees. Good progression.

Ben Golub (Docker Inc. CEO) is on stage.

He suggests that 2900+ people are contributing to Docker (the OSS project).

Docker Hub has 460K images and has seen 4.1B pulls to date.

Ben claims 40-75% of Enterprise companies are using Docker in production. I call this BS (albeit one has to ask what production means).

Solomon Hykes (Docker CTO) gets on stage and starts with an inspirational speech re how docker enables everyone to change the world building code etc.

Solomon suggests that the best tools for developers:

- Get out of the way (they enable but you need to forget about them)

- Adapt to you (this sounds like a comment made against opinionated PaaS vendors)

- Make powerful things in a simple way

Interesting demo involving fixing a bug live locally (on Docker for Mac) in the voting app and deploying the app again to the staging environment (build and deploy automatically triggered by a GitHub push).

CTO of Splice is on stage (this is a good session but a “standard talk” re why Splice loves Docker). Surely the value here is not so much content but rather having a testimonial talking about how they use Docker for real.

Orchestration is the next topic. Solomon claims the technology problem is solved but the problem is that you need an “army of experts”.

Interesting parallel: orchestration today is like containers 3 years ago: solved problem but hard to use.

Docker 1.12 includes lots of built-in orchestration features out of the box.

- Swarm mode: docker engine can detect other engines and form a swarm with a decentralized architecture. Note this seems to be a feature of the daemon Vs implemented with containers (as it used to be).

- Cryptographic node identity: every node in the swarm is identified by a unique key. This is out of the box and completely transparent.

- Docker Service API: dedicated/powerful APIs for Swarm management.

- Built-in Routing Mesh: overlay networking, load balancing, DNS service discovery (long overdue).

Now Docker 1.12 exposes “docker service create” and “docker service scale” commands which sound very similar to the concept of Kubernetes Pods (in theory).

In addition, the routing mesh makes EACH docker host in the swarm expose the published port of the service, whether or not a container of that service is running on that host.

Last but not least, you can scale the containers and the built-in load balancer will help load balance the n instances of the container.
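A rough way to picture the routing mesh and the built-in load balancer working together is sketched below in Python. This is a toy model under stated assumptions, not how Docker implements it: the `RoutingMesh` class, its `handle` method, the container names and the strict round-robin policy are all hypothetical and chosen only for illustration.

```python
from itertools import cycle


class RoutingMesh:
    """Toy model: any node accepts traffic on the published port and
    forwards it to one of the service's containers, wherever it runs."""

    def __init__(self, nodes, published_port, containers):
        self.nodes = set(nodes)
        self.published_port = published_port
        # containers is a list of (container name, node it runs on) pairs.
        # Assumed balancing policy: plain round-robin over the containers.
        self._backends = cycle(containers)

    def handle(self, entry_node, port):
        """Return the (container, node) pair that serves this request."""
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not part of the swarm")
        if port != self.published_port:
            raise ValueError(f"port {port} is not published")
        return next(self._backends)


mesh = RoutingMesh(
    nodes=["node1", "node2", "node3"],
    published_port=8080,
    containers=[("vote.1", "node1"), ("vote.2", "node1")],
)
# node3 runs no container of the service, yet it still answers on port 8080.
for _ in range(3):
    print(mesh.handle("node3", 8080))
```

The point of the sketch is only that the entry node and the node running the container are decoupled: a request can land on any host and still reach one of the n instances.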

The “service” will make sure that the right number of containers is always running on the swarm (the demo involves shutting down a node, and all its containers get restarted on a different node to honor the committed number of running containers).

The speaker uses the word “migrated” referring to containers restarted on other nodes but (I think) what really happens is that another stateless container is started on the new host. There is no data persistency out-of-the-box (for now at least) that I can see.
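The behaviour shown in the demo can be thought of as a desired-state reconciliation loop. What follows is a toy Python model of that idea, not Docker's actual scheduler: the `Swarm` class, its methods and the "least-loaded node" placement rule are all assumptions made for illustration. Note how the tasks that lived on the failed node are not moved; brand new ones are started elsewhere.

```python
from collections import Counter
from itertools import count


class Swarm:
    """Toy model of a swarm that keeps a service at its desired replica count."""

    def __init__(self, nodes, replicas):
        self.nodes = set(nodes)      # names of healthy nodes
        self.replicas = replicas     # desired number of running tasks
        self.tasks = {}              # task id -> node name
        self._ids = count(1)         # source of fresh task ids
        self.reconcile()

    def reconcile(self):
        """Drop tasks on dead nodes, then start or stop tasks to match the target."""
        self.tasks = {t: n for t, n in self.tasks.items() if n in self.nodes}
        while len(self.tasks) < self.replicas and self.nodes:
            load = Counter(self.tasks.values())
            # Assumed placement rule: pick the least-loaded healthy node.
            target = min(sorted(self.nodes), key=lambda n: load[n])
            self.tasks[next(self._ids)] = target
        while len(self.tasks) > self.replicas:
            # Scale down by stopping the most recently started task.
            del self.tasks[max(self.tasks)]

    def scale(self, replicas):
        self.replicas = replicas
        self.reconcile()

    def fail_node(self, node):
        self.nodes.discard(node)
        self.reconcile()


swarm = Swarm(nodes=["node1", "node2", "node3"], replicas=6)
before = set(swarm.tasks)
swarm.fail_node("node2")
after = set(swarm.tasks)
print(len(swarm.tasks), sorted(set(swarm.tasks.values())))
print(len(after - before), "replacement tasks started")
```

Since the replacement tasks get fresh ids and start from scratch, anything the old containers had written locally is gone, which is the data persistency gap mentioned above.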

Again, this is all very Kubernetes-ish to the point that it feels like the original comment of “needing an army of experts to do proper orchestration” is addressed to Kubernetes itself (my speculation).

Zenly (@zenlyapp) is on stage.

They claim that public cloud is expensive (at their scale) and they decided to move from (GCP) to on-prem (on bare-metal). They don’t mention why bare-metal (albeit their use case is so well defined that their “single app” focus does not need the flexibility that virtualization can provide in an otherwise more complex environment).

Solomon introduces the DAB (Distributed Application Bundle) as a way to package the containers comprising your distributed application as a bundled “app” (docker-compose bundle).

And here we are back full circle to distributing an app as a 500GB zip file (I am just kidding... sort of).

Docker for AWS and Docker for Azure are announced. This is a native Docker experience integrated with AWS / Azure (e.g. as you expose a port with a docker run the AWS ELB gets configured to expose that port). From the demo this Docker for AWS looks like a CloudFormation template.

The demo involves an IT person deploying a 100-node swarm cluster through this integration and showing his familiarity with all the AWS Ops terminology (keys, disks, instances, load balancers etc).

They copy the voting app DAB file to an AWS instance (docker host) and they “docker deploy instavote” from that EC2 instance.

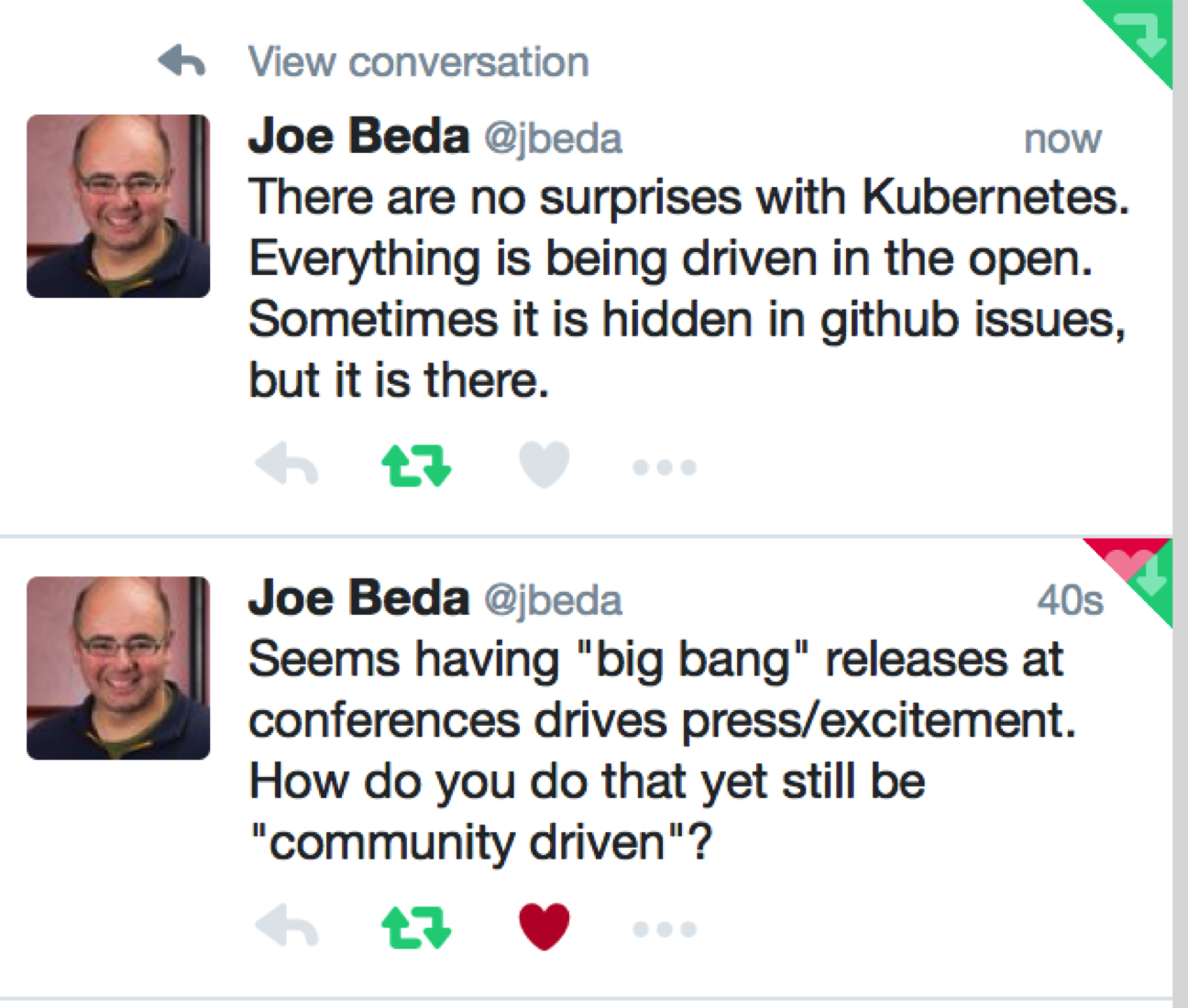

All in all, the first day was completely devoted to the progress being made in the Docker OSS domain. In general, this was very well received even though someone was wondering if this is how you actually run OSS projects:

Tuesday Keynote (rough notes)

Yesterday was all about democratizing docker for developers and individuals.

Today it is all about democratizing docker for Enterprises.

Ben Golub is back on stage and he talks about the fallacies of “Bimodal IT”, “PaaS”, “Private IaaS”.

Docker isn’t about a revolution but rather an evolution.

“PaaS is too high, IaaS is too low, CaaS is just about right”.

He introduces Docker Datacenter.

Docker won’t impose infrastructure models nor will it impose higher level management frameworks etc.

Very entertaining demo on Docker Datacenter covering RBAC, security vulnerability scanning, fixing issues and rolling updates.

Only thing is… all that is being talked about / demoed is often around stateless applications / services. Data persistency in these environments is still the elephant in the room.

Ben gives a shout out to HP for shipping servers with Docker pre-loaded-whatever. No one (I talked to) seems to understand what this means in practice.

Now Mark Russinovich (MS Azure CTO) is on stage showing that Docker Datacenter is featured in the Azure Marketplace.

Despite a semi-failed demo Mark killed it showing a number of things (Docker Datacenter on Azure with nodes on Azure Stack on-prem with the app connecting to a MSSQL instance running on-prem on Ubuntu).

Surely MS wanted to flex its muscles and show a departure from the “it must be Windows only” approach.

The only thing that stood out for me is that they have decided NOT to show anything that relates to running containers on Windows (2016). That was an interesting choice.

Mark is done and now the CTO of ADP is on stage talking about their experience. He claims that every company is becoming a software company to compete more effectively.

In an infinite race among competitors “speed” is more important than “position” (i.e. if you are faster, in the long run you will overtake your competition, no matter what).

Coding faster isn’t enough. You need to ship faster.

He makes an awesome parallel with the F1 race circuit: cars cost $30M and pilots are celebrities BUT the people at the pits (IT?) are what determine whether the pilot will win or lose depending on whether the pit stop is going to be 1.5 seconds or 5 seconds. THAT can make (and will make) the difference.

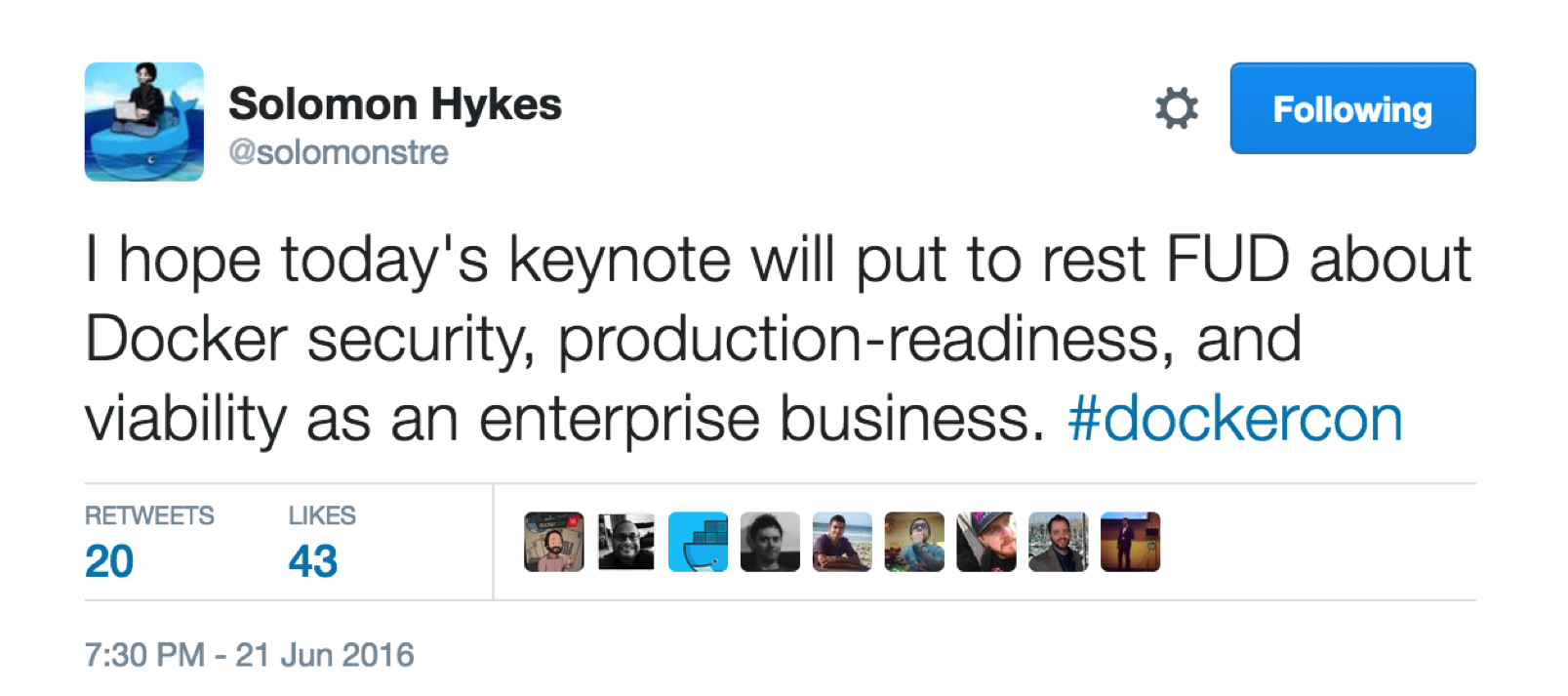

This keynote was all about “running Docker in production”. Solomon made sure the message was well received with a tweet:

Breakout Sessions (rough notes)

What follows is a brief summary of some of the breakout sessions I attended.

Containers & Cloud & VMs: Oh my

The content seems to be a superset of a blog post Docker published weeks ago: https://blog.docker.com/2016/03/containers-are-not-vms/

Quote: “People think about containers as lightweight virtualization and that’s so wrong”.

Some people go bare metal. Some people go virtualized. How did they decide? It depends.

So many variables:

- Performance

- Security

- Scalability

- Existing skillsets

- Costs

- Flexibility

- Etc

If you can’t fill up an entire server with containers go with VMs.

If you are using mixed workloads you may want to consider VMs.

If your apps are latency sensitive, consider bare metal.

If you are looking for DR scenarios, consider going with VMs (vSphere is called out specifically).

If you need Pools / Quotas consider VMs.

If you want to run 1 container per docker host, consider running in VMs.

Interesting comment: dockerizing monolithic applications isn’t a best practice but gives you portability for gen 2 applications.

Overall the session content was a lot of common sense (which needs to be reiterated anyway).

The closing is interesting: “I wish I could give you a precise answer to the question but reality is that we are in the early days and patterns / best practices aren’t yet defined.”

I couldn’t agree more with the above view from the speaker. There is no clear pattern emerging and everyone is doing what they think is the right thing to do.

Answering a question from the audience the speaker claims that security being weaker in containers Vs VMs is just FUD.

Joyent

The session was interesting. You can feel there is some serious talent at Joyent but they seem to be very opinionated re how things should be done (perhaps not a bad thing).

In this session the topic was database persistency and I walked in convinced they would end up talking about docker volumes. Ironically, in the end, they said docker volumes were a big no no and their suggested approaches were database technologies that could self-heal by leveraging native sharding and/or replication support on ephemeral containers.

They were one of the few vendors that did not buy into the “you can dockerize your monolith” new approach Docker and others showed at the conference.

Docker in the Enterprise

This session was delivered by ex-VMware Banjot C (now PM for Docker Datacenter).

Introduction to the value proposition of CaaS (Build / Ship / Run).

Docker Datacenter is Docker’s implementation of a CaaS service.

Goldman Sachs, GSA and ADP are brought up as customers using docker.

Enterprises can start their journey either with monolithic applications or with microservices applications.

Patterns:

- Containerizing a monolith is useful for build/test CI and/or for better Capex/Opex than VMs.

- Containerizing a monolith and then decomposing it into microservices

- Containerizing microservices

The value prop for #1 is that you can now truly port your application everywhere.

Other customers’ numbers:

Swisscom went from 400 MongoDB VMs to 400 containers in 20 VMs.

This session was more of an educational session on how to approach the Docker journey for production. There was little information being shared re Docker Datacenter (the product).

Apcera: Moving legacy applications to containers

This session was interesting (in its own way) as it was a VERY Ops oriented talk re how you profile your legacy app and try to containerize it. Kudos for never mentioning Apcera throughout the talk.

Chef

Interesting session on orchestration (“promise based theory” etc) that concludes with a Chef Habitat demo.

Docker for Ops

Breakout session on running docker in production.

A large part of the session is common sense CaaS / Docker Datacenter concepts (some of which have been covered in the keynote).

The message of “control” is explained in further detail. RBAC is described.

Also the process of IT controlling the image life cycle is described. IT uploads “core” images into the local registry and Devs consume those core images and build their apps on top of them (uploading the resulting images to the local registry as well).

UCP is the piece that actually controls the run-time and controls who can run and see what.

DTR is the piece that determines the authenticity of images and also determines who owns what and who gets access to what.

AWS: Deploying Docker Datacenter on AWS

In this session the AWS solutions engineer shows how to deploy Docker Datacenter on AWS.

Interesting: UCP nodes and DTR nodes leverage the auto-recovery EC2 feature (think vSphere HA) because they are “somewhat stateful” and so the recovery is easier if the instances recover with their original disk / IP address / personality etc.

That was an interesting point that made me think. If not even Docker can design/build from scratch a truly distributed brand-new application, it says a lot about the challenges of “applications modernization”.