The 93,000 Firewall Rules Problem and Why Cloud Is Not Just Orchestration

A few days ago I was in a very interesting meeting with a large Service Provider in Europe, and I heard a lot of interesting comments. I'd like to quote the best one I heard: "Oh, a portal? Oh, not another one... we have many of them already!" That, however, would open up a different can of worms, so I am not going to talk about it now. What I am going to talk about relates to another comment someone made in the middle of the meeting: "...there is a firewall with 93,000 rules configured".

I can't claim to be a security expert by any stretch, but that sounded like a lot to me. This was confirmed by someone with a strong background in this area, who said "... it's a lot, but the record is a Cisco device (somewhere on this earth) with 750,000 rules". Then someone else jumped into the discussion asking "...and what happens when you fat-finger rule #457,986?". I thought this was a joke (but I am not sure).

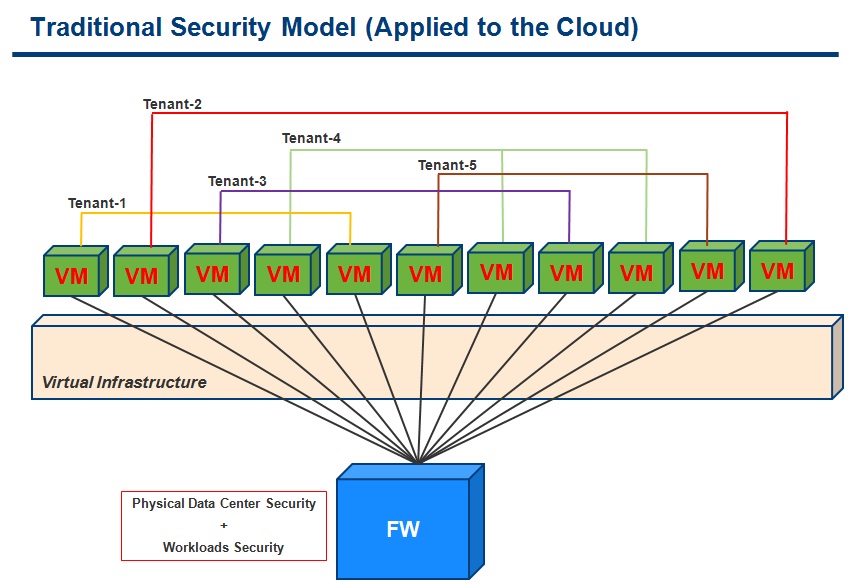

Before we go any further, let's sketch the layout of this scenario in a picture (at a very high level).

Basically the idea, pretty common these days, is that you have a multi-tenant virtual infrastructure with a number of VMs running on top of it. These VMs belong to different customers and are kept separate by means of standard layer 2 segregation (VLANs, if you will). The big (BIG) firewall at the bottom of the picture is the one holding the 93,000 rules that govern how these workloads talk to each other. By the way, this isn't obvious from the picture, but each customer could (and will!) have more than one VLAN, because that's how it works in this world (see below). So 93,000 firewall rules are just the tip of the iceberg: these Service Providers are dealing with other problems too, such as VLAN sprawl and all sorts of issues associated with it.

So why is this a problem for an IaaS cloud? I think there are at least a couple of dimensions to this problem.

Manageability, serviceability and scalability

The first dimension relates to "how on earth can you deal with such a beast?". How do you manage this firewall and, even more importantly, how do you troubleshoot it? That's why I am not sure the person who raised the "fat finger" problem was really joking. Again, my background is not security, so bear with me and please advise if I am missing something. However, whenever I mention situations like these to people who do have a security background, their typical reaction is:

- they laugh first...

- ...and then scratch their heads.

So there must be something wrong somewhere, I think.

For the sake of clarity, I am not bashing the firewall administrator who configured 93,000 rules on that box. I think the problem is how networks (and related security) have worked until now, and the associated "best practices" we have built over the last 10 years. One could write a book on this but, in a nutshell, the way it works is that to secure "services" you create layer 2 domains (aka VLANs) and connect them by means of a firewall. Depending on what you need, you may have to create subnet-based rules and/or IP-based rules. Take this approach and apply it to a Service Provider with thousands of customers, each with a number of "services" deployed, and the rule count multiplies (customers × services × the flows allowed between them): before you realize what's going on, you get to thousands of firewall rules in the blink of an eye.
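
To put rough numbers on that multiplication, here is a back-of-the-envelope sketch in Python. The model and every figure in it are hypothetical, picked purely for illustration (they do not describe the SP from the meeting): each customer has a few security zones, one VLAN each; every pair of zones needs its own handful of subnet/IP rules; and all customers sit behind the same shared firewall.

```python
from math import comb


def estimate_central_rules(customers: int, zones_per_customer: int,
                           rules_per_zone_pair: int) -> int:
    """Rough rule count for ONE firewall shared by all tenants.

    Hypothetical model: every pair of a customer's zones (e.g. DMZ-App,
    App-DB, DMZ-DB) needs `rules_per_zone_pair` subnet/IP rules.
    """
    zone_pairs = comb(zones_per_customer, 2)
    return customers * zone_pairs * rules_per_zone_pair


def estimate_vlans(customers: int, zones_per_customer: int) -> int:
    """Traditional model: one VLAN per zone per customer."""
    return customers * zones_per_customer


if __name__ == "__main__":
    # Made-up inputs: 1,500 customers, 3 zones each, 20 rules per zone pair.
    print(estimate_central_rules(1500, 3, 20))  # 90000
    print(estimate_vlans(1500, 3))              # 4500
```

With these made-up inputs the shared box already holds 90,000 rules, and the 4,500 VLANs exceed the 4,094 usable IDs of a single 802.1Q tag, which is one face of the VLAN sprawl mentioned earlier.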

End-user self-service

The other dimension of the problem pertains to self-service, a concept of paramount importance in all cloud-related discussions. This is a pattern I have seen over and over again at every single Service Provider I have met so far: using a central monolithic firewall to serve many different tenants doesn't allow the SP to easily create a self-service experience for the user. Why? Simply because the more complex and critical the object whose functionalities you want to expose to the end user becomes, the more complex and critical the tool that mediates access to it needs to be. You could solve this problem by using a dedicated physical firewall for each of the customers the SP is hosting. That would reduce complexity and criticality to a level where the SP's effort would be as low as telling the customer "Here is how to access the device as root". Between the lines you could read "Screw it up and only your own organization will be screwed up, I don't care". It sounds great, but it obviously isn't very scalable or manageable. Do you deploy a new physical firewall every time you get a new customer? That's not the promise of cloud, I'd say, if cloud is really about agility, scalability, pay-per-use and so on. These attributes have, in fact, very little to do with deploying a new physical device on the fly when needed.

So what did all these SPs do when they stood up their so-called... "clouds"? They created a portal (probably one of the many we were talking about at the beginning) that gave users self-service capabilities for basic, simple tasks (such as VM provisioning), and they implemented a ticketing system for more advanced tasks (such as creating network security rules for the workloads being provisioned). Not very different from how you'd do it with a traditional hosting solution, you may think. Well, that's one of the reasons many people refer to this practice as "lipstick on a pig" (i.e. take a hosting solution, put a cloud label on it and sell it as if it were a cloud).

The role of the orchestrator

I always say that orchestration is not cloud, but cloud needs orchestration. Will orchestration alone help solve the problems we are discussing here? Personally, I don't think so. I see orchestrators as tools meant to solve operational issues (especially at the scale a cloud infrastructure requires), not as tools that can fix broken architectures. If you take a stone and clean it, it doesn't automagically become a gold nugget. It becomes a clean stone. The same goes for cloud. If you take a "junk architecture" and orchestrate it, does it become a "great architecture"? No, it becomes an "orchestrated junk architecture". Better than having to deal with it manually... but still "junk".

Don't get me wrong: I do think orchestration is key, and you can't have a cloud without (at least a certain degree of) orchestration. However, don't think that a properly architected cloud is just your "legacy" stuff with an additional kilo of orchestration workflows and a nice new portal ("Oh, a portal? Oh, not another one... we have many of them already!").

Is there a way out?

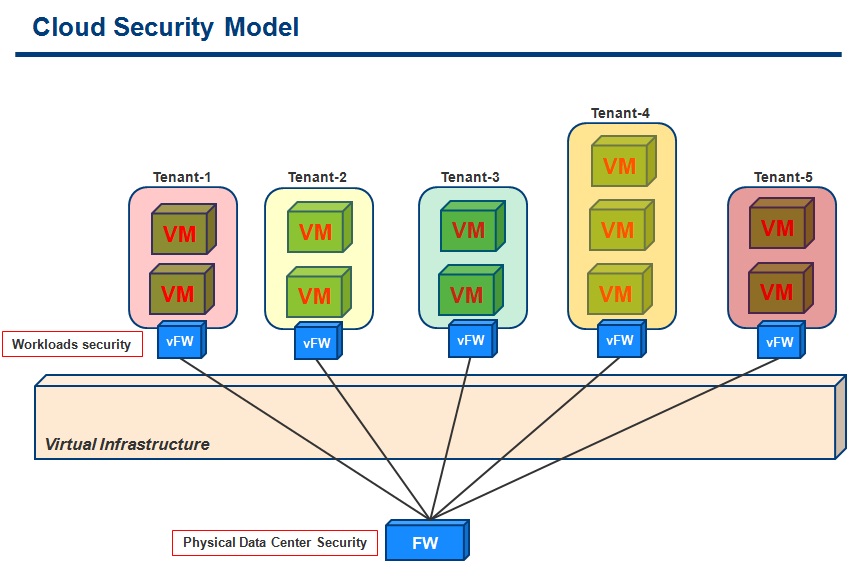

Yes, there is (I think). I believe there is a shared feeling in the industry, at this point, that an architecture like the one in the picture is the way forward. So what is that vFW (aka virtual firewall) in the picture? At VMware we call it vShield Edge. Other vendors may call it something else. Other vendors don't have anything like this today (so expect some level of bashing from their sales reps in the field), but they may end up having it down the road (so expect some embarrassment from the same sales reps who bashed this approach in the past). We started shipping vShield Edge less than a year ago, but we have seen a huge number of people experimenting with an approach like this for years. Recently I met another SP who told me that 2 years ago they started looking into something like this using virtual appliances from Vyatta. I also recently wrote about a small business partner getting into the "cloud" from a provider perspective and using the same model/architecture without anyone telling them this was "the right" model: they figured it out themselves based on the challenges they were dealing with! And if this isn't enough to convince you that there is a trend here, look at what Amazon started to pitch a couple of weeks ago.

So what's so neat about this model? The idea is pretty simple: instead of using a monolithic physical firewall outside of the virtual infrastructure domain, you deploy multiple virtualization-aware firewalls that essentially protect the same VLAN(s), but do so in a more flexible and agile way. Besides reducing the configuration complexity of a single object (the "93,000 rules" problem), you also gain easy self-service through administration delegation. As we said at the beginning, it is difficult to give controlled access to a shared device. However, if you create a virtual device that is only supposed to "rule" access to the VLANs dedicated to a given customer... you can easily delegate full access to that virtual device to that specific customer. This is at the core of the vCloud Director self-service capabilities. In many cases you'd still want the traditional physical device for data center level protection against external attacks and for advanced firewall features that these virtual firewalls may be missing today. However, the complexity of its configuration would be drastically reduced, because the workload security rules would be managed directly on the virtual firewall devices.
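
The split-and-delegate idea can be sketched in a few lines of Python. This is not the vShield Edge or vCloud Director API: `Rule`, `VirtualFirewall` and `split_by_tenant` are hypothetical names. The sketch takes one monolithic rule base, spreads it over one virtual firewall per tenant, and lets each tenant edit only its own device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    tenant: str          # customer that owns the rule
    src: str             # source subnet or IP
    dst: str             # destination subnet or IP
    port: int
    action: str = "allow"


class VirtualFirewall:
    """Hypothetical per-tenant firewall appliance; only its owner may edit it."""

    def __init__(self, tenant: str) -> None:
        self.tenant = tenant
        self.rules: list[Rule] = []

    def add_rule(self, requested_by: str, rule: Rule) -> None:
        # Delegation: the tenant gets full control, but only of its own device.
        if requested_by != self.tenant or rule.tenant != self.tenant:
            raise PermissionError(
                f"{requested_by} cannot edit the firewall of {self.tenant}")
        self.rules.append(rule)


def split_by_tenant(central_rules: list[Rule]) -> dict[str, VirtualFirewall]:
    """Spread one monolithic rule base over one virtual firewall per tenant."""
    firewalls: dict[str, VirtualFirewall] = {}
    for rule in central_rules:
        if rule.tenant not in firewalls:
            firewalls[rule.tenant] = VirtualFirewall(rule.tenant)
        firewalls[rule.tenant].add_rule(rule.tenant, rule)
    return firewalls


if __name__ == "__main__":
    central = [
        Rule("acme", "10.0.1.0/24", "10.0.2.10", 443),
        Rule("acme", "10.0.2.0/24", "10.0.3.20", 5432),
        Rule("globex", "10.0.1.0/24", "10.0.2.15", 80),
    ]
    per_tenant = split_by_tenant(central)
    print({t: len(fw.rules) for t, fw in per_tenant.items()})  # {'acme': 2, 'globex': 1}
```

Note that the total number of rules is unchanged; it is just spread across smaller, independently delegated devices.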

Can we do even better?

We could do better, yes! What we have been talking about so far is basically about keeping the very same number of VLANs and firewall rules... and spreading these rules across virtual firewalls. This solves a lot of problems, for example when it comes to self-service (delegation of the entire device) and scalability (just deploy another virtual appliance when there is a new customer), but it doesn't by itself solve the problem of VLAN sprawl and the 93,000 firewall rules (although they are now segmented into separate security domains dedicated to each customer). VMware has other technologies that may help address these other problems.

The first one is called vCloud Director Network Isolation (vCDNI) in vCloud parlance, or vShield PortGroup Isolation (PGI) in vShield parlance. It is, basically, a technology that allows you to virtualize a VLAN: different customers can be assigned dedicated vDS PortGroups that represent separate layer 2 domains... yet share the same VLAN ID. We use a technique called MAC-in-MAC to implement this. Kamau just published a very interesting blog post on how this works, if you want to read more. This technology is already available and fully integrated in vCloud Director, so you can use it today if you want to.
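
Conceptually, MAC-in-MAC wraps the tenant's Ethernet frame inside an outer frame, so the physical switches only ever see the shared VLAN, while the virtual switch uses an extra network identifier to decide which ports get the frame. The Python sketch below models only that idea: it is not the vCDNI wire format, and `OuterFrame`, `network_id`, `encapsulate` and `deliver` are hypothetical names.

```python
from dataclasses import dataclass

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass(frozen=True)
class InnerFrame:
    """The tenant's original Ethernet frame (only the fields we need)."""
    src_mac: str
    dst_mac: str
    payload: bytes


@dataclass(frozen=True)
class OuterFrame:
    """Conceptual MAC-in-MAC wrapper around the tenant frame."""
    vlan_id: int      # the one VLAN the physical switches actually see
    network_id: int   # hypothetical per-tenant isolated-network ID
    inner: InnerFrame


@dataclass(frozen=True)
class Port:
    """A VM port on a PortGroup backed by an isolated network."""
    name: str
    mac: str
    vlan_id: int
    network_id: int


def encapsulate(inner: InnerFrame, vlan_id: int, network_id: int) -> OuterFrame:
    return OuterFrame(vlan_id, network_id, inner)


def deliver(frame: OuterFrame, ports: list[Port]) -> list[str]:
    """Names of the ports that receive the frame after decapsulation."""
    return [
        p.name
        for p in ports
        if p.vlan_id == frame.vlan_id
        and p.network_id == frame.network_id   # same virtual layer 2 domain
        and p.mac != frame.inner.src_mac       # don't echo back to the sender
        and frame.inner.dst_mac in (p.mac, BROADCAST)
    ]


if __name__ == "__main__":
    # Two tenants sharing VLAN 100, kept apart by different network IDs.
    ports = [
        Port("acme-web", "00:50:56:00:00:01", 100, 1),
        Port("acme-db", "00:50:56:00:00:02", 100, 1),
        Port("globex-web", "00:50:56:00:00:03", 100, 2),
    ]
    arp = encapsulate(InnerFrame("00:50:56:00:00:01", BROADCAST, b"who-has"), 100, 1)
    print(deliver(arp, ports))  # ['acme-db']
```

The broadcast from one tenant never reaches the other tenant's port, even though both sit on VLAN 100.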

There is another elegant way to tackle the VLAN sprawl problem and, more specifically, the proliferation of rules you have to create in the firewall(s). This can be achieved with another vShield technology called vShield App. Think of vShield App as a vDS port-based firewall where you can say "this vNIC can talk to this other vNIC over this particular port". The vNICs in question are connected to the same vDS PortGroup (i.e., in essence a single layer 2 domain). So imagine having a single network segment where you can create rules that mimic the deployment of a DMZ, an application security zone, a database security zone, etc. Instead of using three VLANs (in this example), you could use one and have this segmentation happen at the vDS layer via vShield App rules. The cool thing about App, in my opinion at least, is that it supports both typical 5-tuple firewall rules and traditional vSphere constructs such as datacenters, clusters, resource pools and the like. So you can say that all VMs in this "container" can only communicate with VMs in that other "container" over a specific port. This way you can change IPs and add/remove VMs from the containers, and the security policies will still apply, simplifying and shrinking the "93,000 rules problem". For the sake of clarity, this vShield technology (App) isn't integrated (today) with vCloud Director, but I hope you see a trend here.
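
A minimal Python sketch of the container idea. Nothing here is the vShield App API: `VM`, `ContainerRule` and `ContainerFirewall` are hypothetical names, and a "container" stands in for whatever vSphere construct (cluster, resource pool, ...) you group VMs by. The point is that rules reference membership rather than IP addresses, and anything not explicitly allowed is denied.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    ip: str          # deliberately NOT used by the rules below
    container: str   # e.g. a resource pool or cluster name (hypothetical)


@dataclass(frozen=True)
class ContainerRule:
    src_container: str
    dst_container: str
    port: int


@dataclass
class ContainerFirewall:
    rules: list[ContainerRule] = field(default_factory=list)

    def allowed(self, src: VM, dst: VM, port: int) -> bool:
        # Default deny: traffic passes only if a rule matches on container
        # membership and port. IP addresses play no part in the decision.
        return any(
            r.src_container == src.container
            and r.dst_container == dst.container
            and r.port == port
            for r in self.rules
        )


if __name__ == "__main__":
    # One layer 2 segment, three "zones" expressed as containers.
    fw = ContainerFirewall([
        ContainerRule("DMZ", "App", 8080),
        ContainerRule("App", "DB", 5432),
    ])
    web = VM("web01", "10.0.0.10", "DMZ")
    app = VM("app01", "10.0.0.20", "App")
    db = VM("db01", "10.0.0.30", "DB")
    print(fw.allowed(web, app, 8080))  # True
    print(fw.allowed(web, db, 5432))   # False: the DMZ can't reach the DB directly
    app.ip = "10.0.0.99"               # re-IP the VM: no rule to touch
    print(fw.allowed(app, db, 5432))   # True
```

Two rules cover any number of VMs in those three zones; re-IPing or adding a VM only requires the right container membership, not a new rule.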

Now imagine combining vCDNI with vShield App. You could, potentially, use one single VLAN to support multiple tenants, and within each "virtual VLAN" create rules that represent multiple security zones, effectively mimicking DMZs, back-ends, etc.
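
Putting the two ideas together, a hypothetical policy check could first enforce layer 2 isolation (same VLAN and same isolated network) and only then look up zone rules scoped to that tenant. Again, this is a sketch under assumed names (`Workload`, `network_id`, `Policy`, `allowed`), not how vCDNI or vShield App are actually implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    vlan_id: int      # the one shared transport VLAN
    network_id: int   # hypothetical isolated-network ("virtual VLAN") ID per tenant
    zone: str         # security zone inside that network: "DMZ", "App", "DB", ...


# Hypothetical policy: per isolated network, the allowed (src_zone, dst_zone, port).
Policy = dict[int, set[tuple[str, str, int]]]


def allowed(policy: Policy, src: Workload, dst: Workload, port: int) -> bool:
    # 1) Layer 2 isolation: different isolated networks never reach each
    #    other directly, even though they share the same VLAN ID.
    if (src.vlan_id, src.network_id) != (dst.vlan_id, dst.network_id):
        return False
    # 2) Zone rules, scoped to this tenant's own network (default deny).
    return (src.zone, dst.zone, port) in policy.get(src.network_id, set())


if __name__ == "__main__":
    policy: Policy = {
        1: {("DMZ", "App", 8080), ("App", "DB", 5432)},  # tenant "acme"
        2: {("DMZ", "App", 443)},                        # tenant "globex"
    }
    acme_web = Workload("acme-web", 100, 1, "DMZ")
    acme_app = Workload("acme-app", 100, 1, "App")
    globex_app = Workload("globex-app", 100, 2, "App")
    print(allowed(policy, acme_web, acme_app, 8080))   # True
    print(allowed(policy, acme_web, globex_app, 443))  # False: other tenant
```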

Conclusions

While I focused a lot on the products I am working with at the moment, the message I wanted to convey with this post is that the current network security model seems to be broken, in a big way, especially if you think about it in the scope of cloud-like deployments where agility and self-service are big mantras. There are alternative architectures that are proving to be better in this context, and there is a range of products that can implement this new architecture. I mentioned vShield and vCloud Director, but you can use other products if you want... as long as you fix that junk! The other point I was trying to make in this post is that orchestration by itself cannot fix a bad architecture, and these two topics (architecture and orchestration) should really be treated as two separate workstreams when you design your cloud infrastructure. Once again, orchestration is not the means by which you fix a bad architecture layout.

Now I'm talking as if I knew what I was saying. Funny.

Massimo.